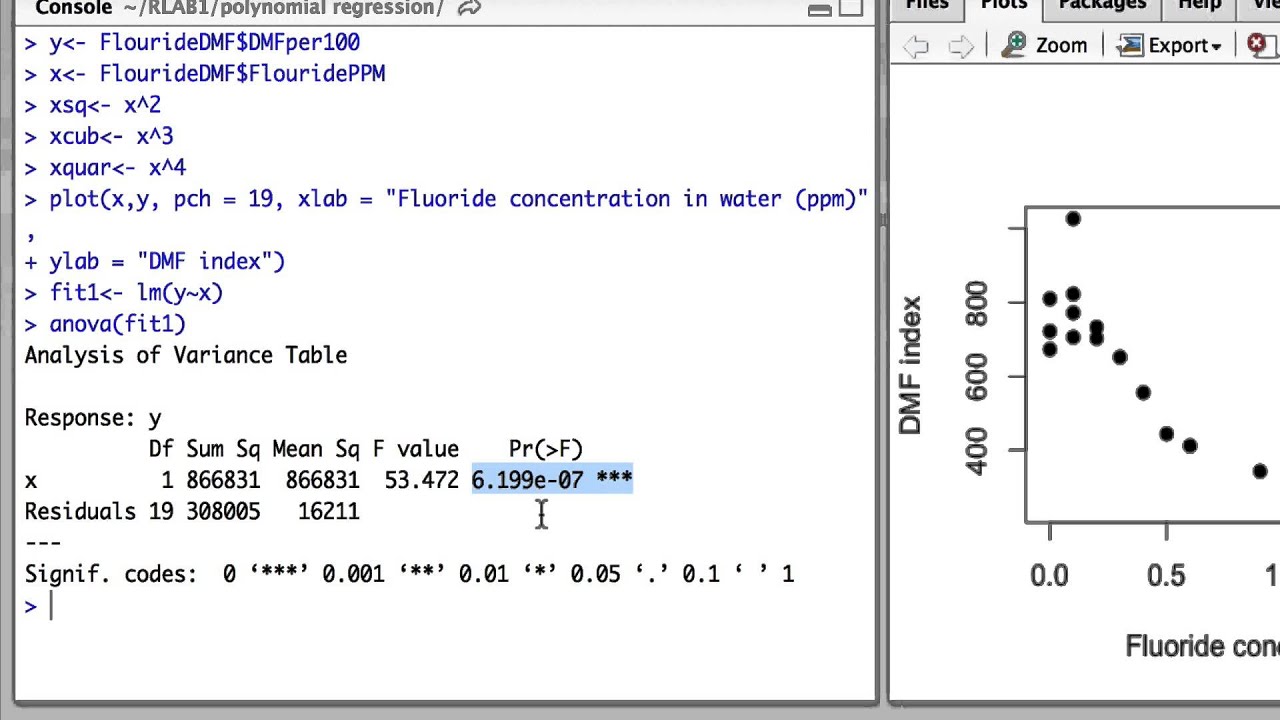

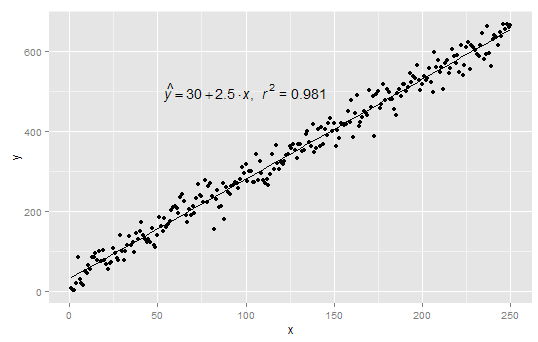

The lm function in R constructs, as its name implies, a linear model from data. Recall that a linear model is of the form \(Y = \beta_0 + \beta_1 X_1 + \dots + \beta_n X_n\), where \(Y\) is the value of the response variable and each \(X_i\) is the value of an explanatory variable.

We think of each \(\beta_i\) as the slope of the line (also called the “coefficient” or “parameter”); \(\beta_0\) is the intercept. “Fitting a line” means finding values for each \(\beta_i\) so that the error (or “residual”) between the fitted line and the observed data is minimized.

The slopes learned by the linear regression algorithm can be used in two ways. One of them is root cause analysis: the size, direction (positive or negative), and statistical significance of each slope give us a better understanding of the factors that might cause variation in the value of the response variable \(Y\).
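As a minimal sketch of how lm produces the slopes and their significance, the example below fits a model with two explanatory variables. The dataset (R's built-in `mtcars`) and the choice of `mpg`, `wt`, and `hp` as variables are assumptions made purely for illustration; they do not come from the text above.

```r
# Illustrative only: mtcars ships with base R; the variable choice is an assumption.
# Model: mpg = beta_0 + beta_1 * wt + beta_2 * hp
fit <- lm(mpg ~ wt + hp, data = mtcars)

# Estimated coefficients: the intercept (beta_0) and one slope per explanatory variable.
betas <- coef(fit)
print(betas)

# Coefficient table: estimate (size and sign), standard error,
# t value, and p-value (statistical significance) for each coefficient.
coef_table <- summary(fit)$coefficients
print(coef_table)

# Residuals: observed response minus the value on the fitted line.
res <- residuals(fit)
print(head(res))
```

The sign of each entry in the `Estimate` column gives the direction of the relationship, and the `Pr(>|t|)` column is what one would inspect to judge statistical significance.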

